Trick or treat!

Happy Halloween party people! Hope your day is full of treats, and minimal tricks.

I will say, there is plenty to be frightened of this Halloween - I'm thinking specifically about all the talk of the "AI bubble." This video by GamersNexus - aptly titled "WTF Is Going On?" - is an incredibly succinct summary of the issue:

I admittedly was too young for the dot-com bubble, but all the talking points are the same: incredible valuations for companies that have no feasible path towards actual profit.

I'm not the biggest advocate for AI by any means, though I do applaud companies like Anthropic and their stated mission of building artificial intelligence safely and with considerable thought for its impact on the broader world. But the truth is that the space is currently a money printing machine, and it's attracted some bad actors. OpenAI's launch of Sora 2 earlier this year - in a thinly veiled "private beta" that required users to have an access code, and which quickly spawned online communities where codes were shared broadly - represents their shift from a company that aimed to "develop AGI safely and share its benefits with humanity" towards a company chasing the next shiny feature release to keep investors excited. Of course, the less said about Elon Musk and xAI, the better, but they too have been perverse in their exploitation of the space, chasing investor dollars by any means necessary.

This isn't to say that I think Anthropic will change course similarly - they are, after all, founded by ex-OpenAI folks who were dissatisfied with the direction the company was taking. But it serves as a case study of the fact that those who state they are trying to build responsibly are few and far between - and those who do pursue this avenue fall incredibly short in terms of market share.

At Sandstorm, I started our AI Committee after early discussions in 2023 on where AI was heading and how it would impact our work. The stated goal was to ensure we were implementing AI responsibly - not only for our clients, but also for our own internal uses - and to make sure we weren't displacing real human beings with artificial intelligence. I think everyone in the tech space is skeptical and, frankly, nervous about the impact AI will have on the sector. I don't personally believe we'll be replacing human workforces with artificial intelligence any time soon - the level of compute required to power the current ecosystem is so vast that it has sworn enemies like NVIDIA and AMD teaming up to produce chips - but I do believe it is something we'll have to grapple with in our lifetimes.

I think about a story my father has told me countless times about the internet in its early adoption. As part of a cutting-edge solution in the late 90s/early 2000s, the company he worked for acquired their own web server - a massive hunk of metal and transistors that took up an entire storeroom of space, with a hefty price tag of half a million dollars to boot!

What did their company get in return for such an investment? 500MB of storage capacity. Within just 5 years, the server found its new home: the garbage bin.

I recall this story because it's the one thing I'm reminded of when I read about the massive infrastructure projects taking place to power the big AI players of today. xAI's eyerolling-ly named "Colossus" project currently houses 200k GPUs in a massive industrial warehouse:

As they gloat in their image, construction of the datacenter took only 122 days. As anyone in the tech space will tell you, building fast almost certainly comes at the expense of quality. As GPU providers continue to hone their tech for AI workloads, I start to daydream about how the demolition of Colossus, once it becomes irrelevant in a few short years, will take even less than 122 days :)

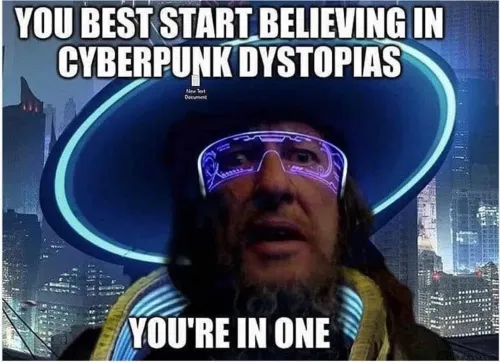

Quite the scary story for this year's Halloween! As the saying goes: